Expanding Scene Graph Boundaries: Fully Open-vocabulary Scene Graph Generation via Visual-Concept Alignment and Retention

Zuyao Chen1,2, Jinlin Wu2,3, Zhen Lei2,3,4, Zhaoxiang Zhang2,3,4, Changwen Chen1

1The Hong Kong Polytechnic University 2Centre for Artificial Intelligence and Robotics, HKISI-CAS 3Institute of Automation, CAS 4University of Chinese Academy of Sciences

Abstract

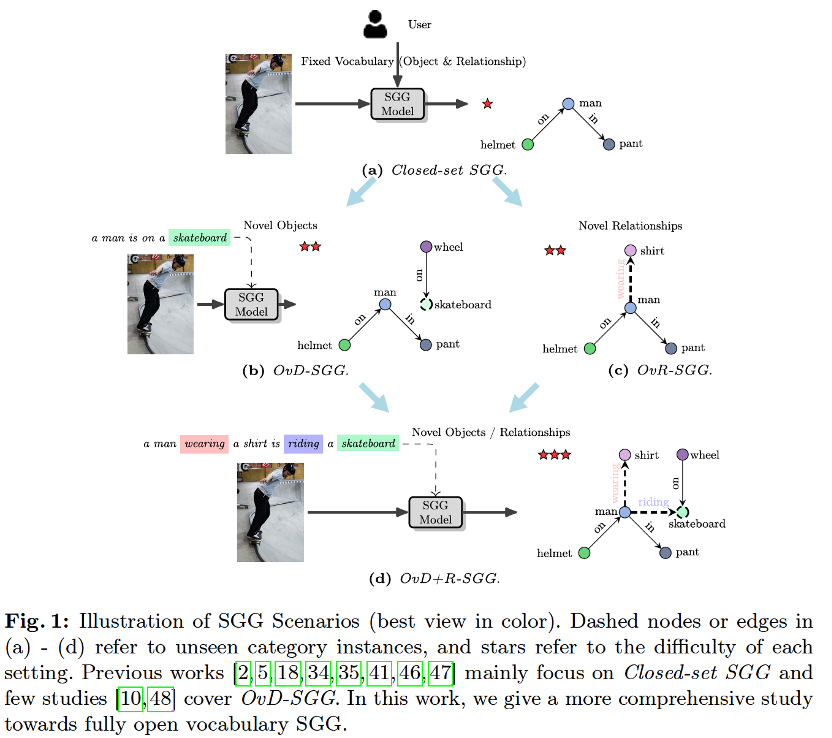

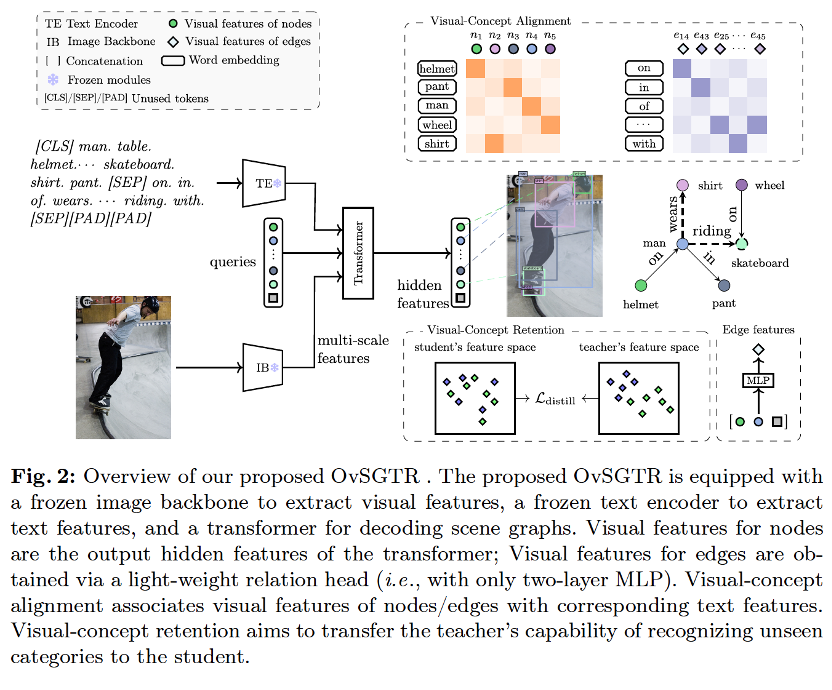

Scene Graph Generation (SGG) offers a structured representation critical in many computer vision applications. Traditional SGG approaches, however, are limited by a closed-set assumption, which restricts them to recognizing only predefined object and relation categories. To overcome this, we categorize SGG scenarios into four distinct settings based on the nodes (objects) and edges (relations): Closed-set SGG, Open Vocabulary (object) Detection-based SGG (OvD-SGG), Open Vocabulary Relation-based SGG (OvR-SGG), and Open Vocabulary Detection + Relation-based SGG (OvD+R-SGG). While object-centric open-vocabulary SGG has been studied recently, the more challenging problem of relation-involved open-vocabulary SGG remains relatively unexplored. To fill this gap, we propose a unified framework named OvSGTR toward fully open-vocabulary SGG from a holistic view. The proposed framework is an end-to-end transformer architecture that learns a visual-concept alignment for both nodes and edges, enabling the model to recognize unseen categories. For the more challenging settings of relation-involved open-vocabulary SGG, the proposed approach integrates relation-aware pre-training on image-caption data and retains visual-concept alignment through knowledge distillation. Comprehensive experimental results on the Visual Genome benchmark demonstrate the effectiveness and superiority of the proposed framework. Our code is available at link.

Motivation

Q1: Can the model predict unseen objects or relationships?

Q2: What if the model encounters both unseen objects and unseen relationships?

Method

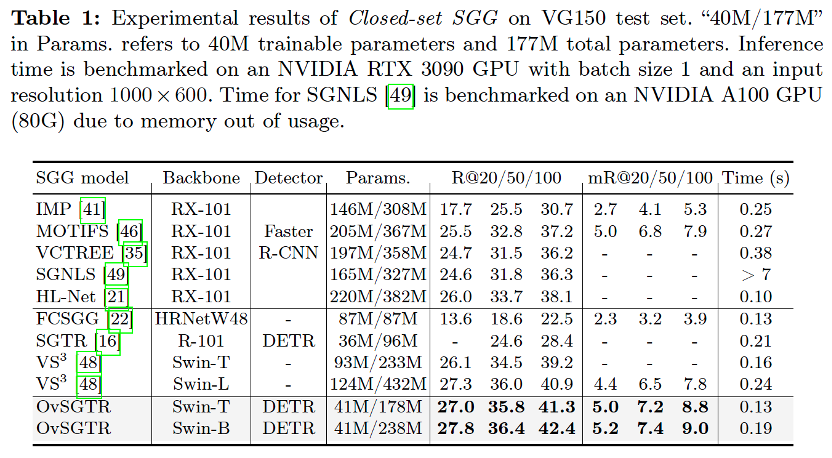

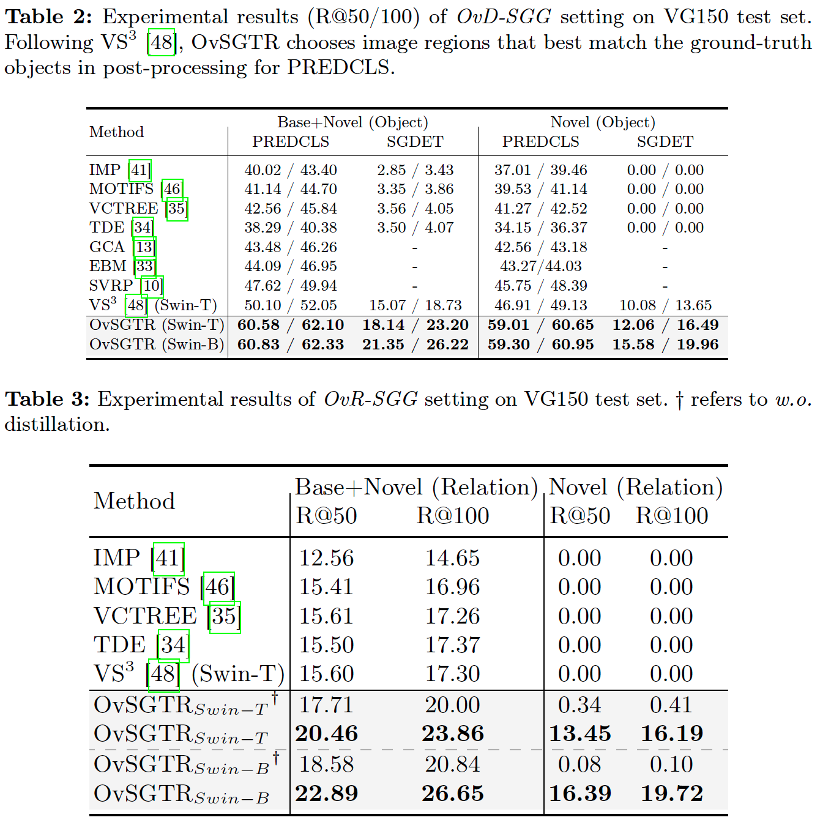

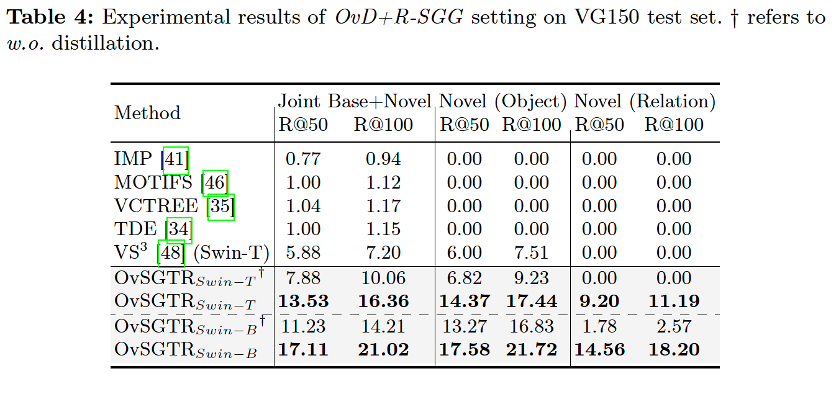
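The two ideas named in the abstract, visual-concept alignment and its retention through knowledge distillation, can be illustrated with a minimal sketch. This is not the OvSGTR implementation: the function names, the toy 3-dimensional embeddings, the cosine-similarity scoring, and the L1 form of the distillation loss are all assumptions chosen for illustration. The point is only that once a visual feature is scored against text embeddings of category names, the label set can be extended at inference time, and that a distillation term can penalize a student model for drifting away from a frozen teacher's features.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def alignment_scores(visual_feature, concept_embeddings):
    """Cosine similarity between one visual feature and every concept embedding.

    `concept_embeddings` maps a category name to its (hypothetical) text
    embedding; adding a new name to the dict extends the vocabulary.
    """
    v = l2_normalize(visual_feature)
    scores = {}
    for name, embedding in concept_embeddings.items():
        e = l2_normalize(embedding)
        scores[name] = sum(a * b for a, b in zip(v, e))
    return scores


def predict_concept(visual_feature, concept_embeddings):
    """Return the concept whose embedding is best aligned with the feature."""
    scores = alignment_scores(visual_feature, concept_embeddings)
    return max(scores, key=scores.get)


def distillation_loss(student_features, teacher_features):
    """Mean absolute difference between student and teacher features.

    Assumed L1 form: the student is penalized for moving away from the
    frozen teacher, which is one way to retain a learned alignment.
    """
    total, count = 0.0, 0
    for s_vec, t_vec in zip(student_features, teacher_features):
        for s, t in zip(s_vec, t_vec):
            total += abs(s - t)
            count += 1
    return total / count if count else 0.0


# Toy relation vocabulary with made-up embeddings (illustrative only).
relation_embeddings = {
    "on": [1.0, 0.0, 0.0],
    "riding": [0.0, 1.0, 0.0],
    "holding": [0.0, 0.0, 1.0],
}
edge_feature = [0.1, 0.9, 0.2]  # hypothetical visual feature for a subject-object pair
predicted_relation = predict_concept(edge_feature, relation_embeddings)

# Toy student/teacher edge features for the distillation term.
student = [[1.0, 2.0], [0.0, 1.0]]
teacher = [[1.0, 1.0], [0.0, 0.0]]
loss = distillation_loss(student, teacher)
```

Because prediction is a nearest-concept lookup rather than a fixed-size classifier head, adding an unseen relation name with its embedding to `relation_embeddings` requires no change to the scoring code.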

Results

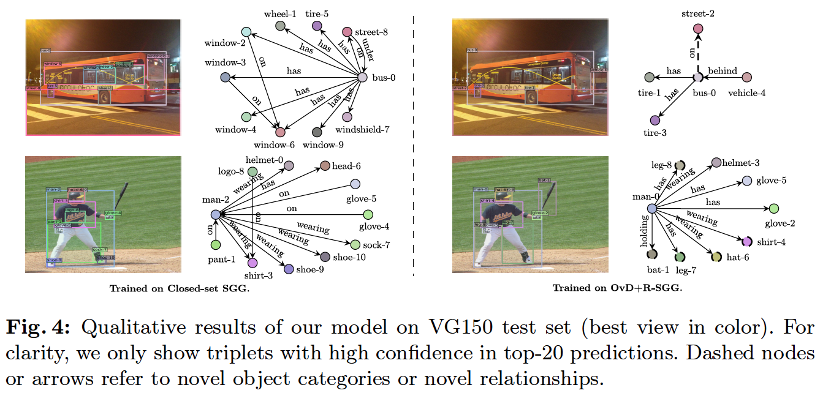

Visualizations

Citation

Please cite our paper if you find this project helpful.

@inproceedings{chen2024expanding,
  title={Expanding Scene Graph Boundaries: Fully Open-vocabulary Scene Graph Generation via Visual-Concept Alignment and Retention},
  author={Chen, Zuyao and Wu, Jinlin and Lei, Zhen and Zhang, Zhaoxiang and Chen, Changwen},
  booktitle={European Conference on Computer Vision},
  year={2024},
  organization={Springer}
}