Domain-Agnostic Crowd Counting via Uncertainty-Guided Style Diversity Augmentation

Guanchen Ding1, Lingbo Liu2, Zhenzhong Chen3, Chang Wen Chen1

1The Hong Kong Polytechnic University 2Peng Cheng Laboratory 3Wuhan University

Abstract

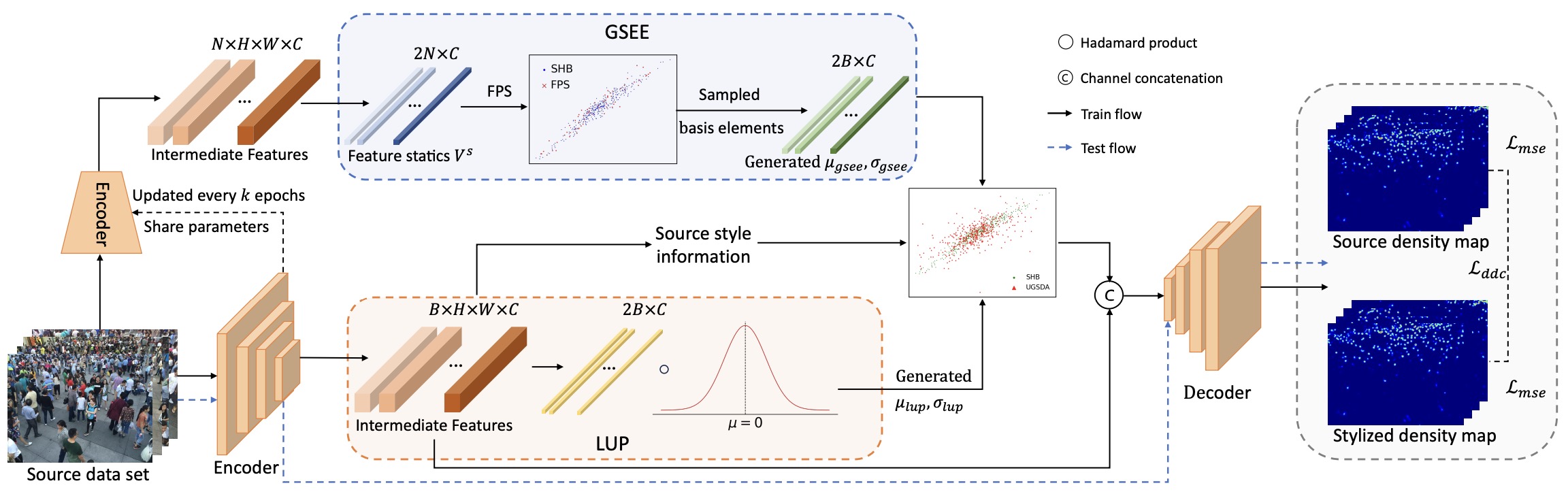

Domain shift poses a significant barrier to the performance of crowd counting algorithms in unseen domains. While domain adaptation methods address this challenge by utilizing images from the target domain, they become impractical when acquiring target domain images is difficult. Additionally, these methods require extra training time because they must be fine-tuned on target domain images. To tackle this problem, we propose an Uncertainty-Guided Style Diversity Augmentation (UGSDA) method, which enables crowd counting models to be trained solely on the source domain and directly generalized to different unseen target domains. This is achieved by generating sufficiently diverse and realistic samples during the training process. Specifically, our UGSDA method incorporates three tailor-designed components: the Global Styling Elements Extraction (GSEE) module, the Local Uncertainty Perturbations (LUP) module, and the Density Distribution Consistency (DDC) loss. The GSEE module extracts global style elements from the feature space of the whole source domain. The LUP module obtains uncertainty perturbations from the batch-level input to form style distributions beyond the source domain; these perturbations are used together with the global style elements to generate diversified stylized samples. To regulate the extent of the perturbations, the DDC loss imposes constraints between the source samples and the stylized samples, ensuring that the stylized samples remain realistic and reliable. Comprehensive experiments validate the superiority of our approach, demonstrating its strong generalization capabilities across various datasets and models. Code is available at link.

Method

We propose an Uncertainty-Guided Style Diversity Augmentation (UGSDA) method for domain-agnostic crowd counting. UGSDA comprises a Global Styling Elements Extraction (GSEE) module, a Local Uncertainty Perturbations (LUP) module, and the Density Distribution Consistency (DDC) loss. The GSEE module represents the C-dimensional style space by sampling C global style elements. Because the source domain data may not capture all possible styles, we apply the LUP module while generating each training batch to introduce uncertainty into the global style elements, which enables the creation of a diverse set of styles. The LUP module calculates the mean and variance of the images at the batch level and samples from a Gaussian distribution to obtain perturbation values for the global style elements. To prevent the stylized samples from deviating too far from real-world scenarios, we also employ the proposed DDC loss to regularize the difference between the density distributions of real samples and stylized samples in the high-dimensional space. Together, these components generate sufficiently diverse samples during the training process, thereby enhancing the model's generalization capabilities.
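The batch-level perturbation step can be illustrated with a minimal NumPy sketch. It is not the released implementation: the function names, the per-channel `style_mu` / `style_sigma` arrays standing in for the global style elements, the use of feature statistics rather than raw image statistics, and the AdaIN-style re-normalization are all assumptions made for illustration. GSEE and the DDC loss are not covered here.

```python
import numpy as np


def channel_stats(x, eps=1e-6):
    """Per-sample, per-channel mean and std of a (B, C, H, W) array."""
    mu = x.mean(axis=(2, 3), keepdims=True)
    sigma = np.sqrt(x.var(axis=(2, 3), keepdims=True) + eps)
    return mu, sigma


def perturb_style(x, style_mu, style_sigma, rng, eps=1e-6):
    """Re-style x with Gaussian-perturbed style statistics (hypothetical sketch).

    x           : (B, C, H, W) features of one training batch
    style_mu    : (C,) assumed global style means (stand-in for GSEE output)
    style_sigma : (C,) assumed global style stds  (stand-in for GSEE output)
    rng         : numpy.random.Generator used to draw the Gaussian noise
    """
    batch, channels = x.shape[:2]
    mu, sigma = channel_stats(x, eps)

    # Batch-level uncertainty: how much the statistics vary across the batch.
    unc_mu = np.sqrt(mu.var(axis=0, keepdims=True) + eps)
    unc_sigma = np.sqrt(sigma.var(axis=0, keepdims=True) + eps)

    # Gaussian perturbations, one draw per sample and channel.
    noise_mu = rng.standard_normal((batch, channels, 1, 1))
    noise_sigma = rng.standard_normal((batch, channels, 1, 1))

    new_mu = style_mu.reshape(1, channels, 1, 1) + noise_mu * unc_mu
    # Assumption: take the absolute value so the new scale stays non-negative.
    new_sigma = np.abs(
        style_sigma.reshape(1, channels, 1, 1) + noise_sigma * unc_sigma
    )

    # Remove the original style, then apply the perturbed one.
    normalized = (x - mu) / sigma
    return normalized * new_sigma + new_mu


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.standard_normal((4, 3, 8, 8))
    stylized = perturb_style(feats, np.zeros(3), np.ones(3), rng)
    print(stylized.shape)
```

In this sketch the spread of the sampled styles grows with the variation of the statistics inside the batch, so a batch of near-identical images yields styles close to the given global elements, while a heterogeneous batch yields styles further away from them.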

Results

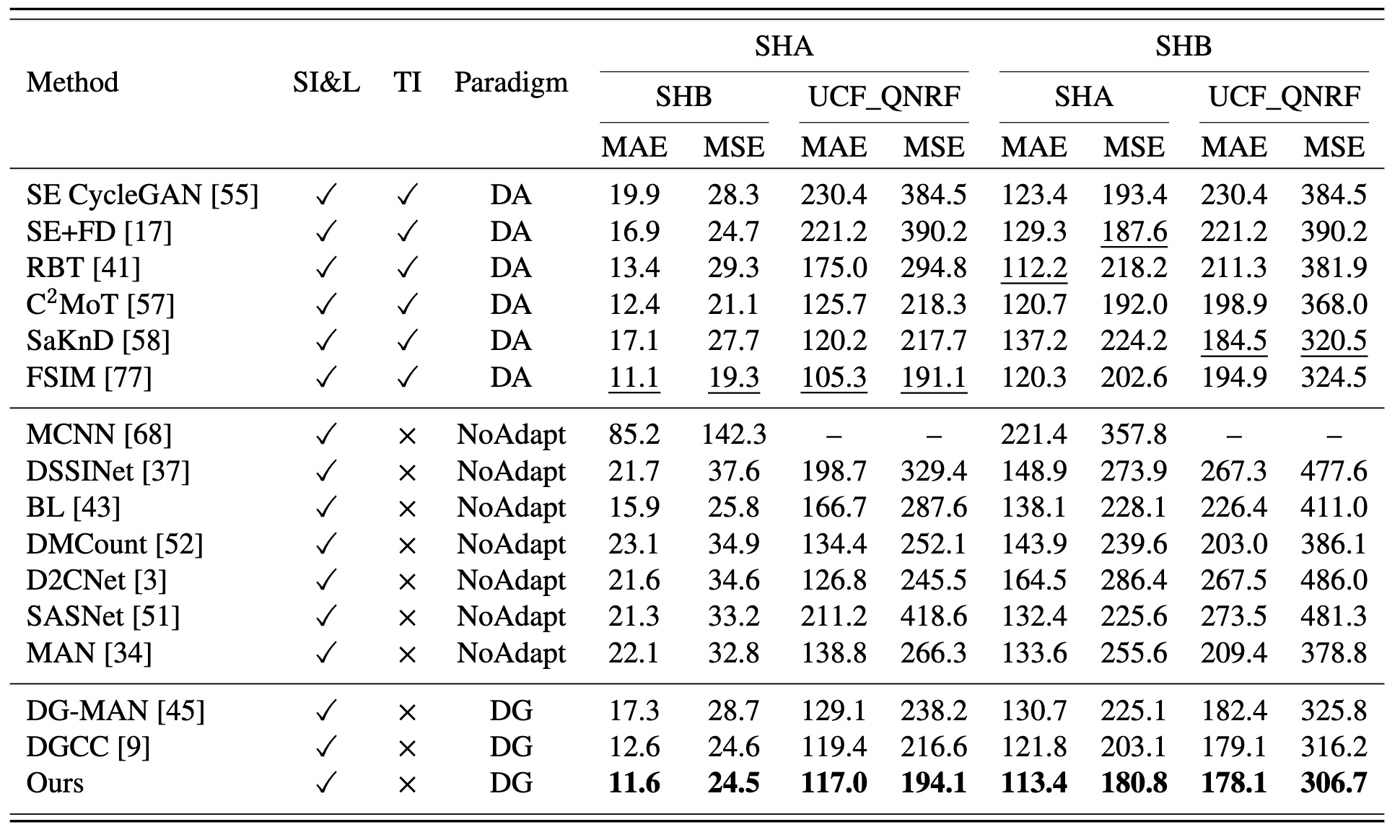

Quantitative comparisons of domain generalization with SHA and SHB as the source domains.

Quantitative comparisons of domain generalization with SHA, SHB, and UCF_QNRF as the source domains.

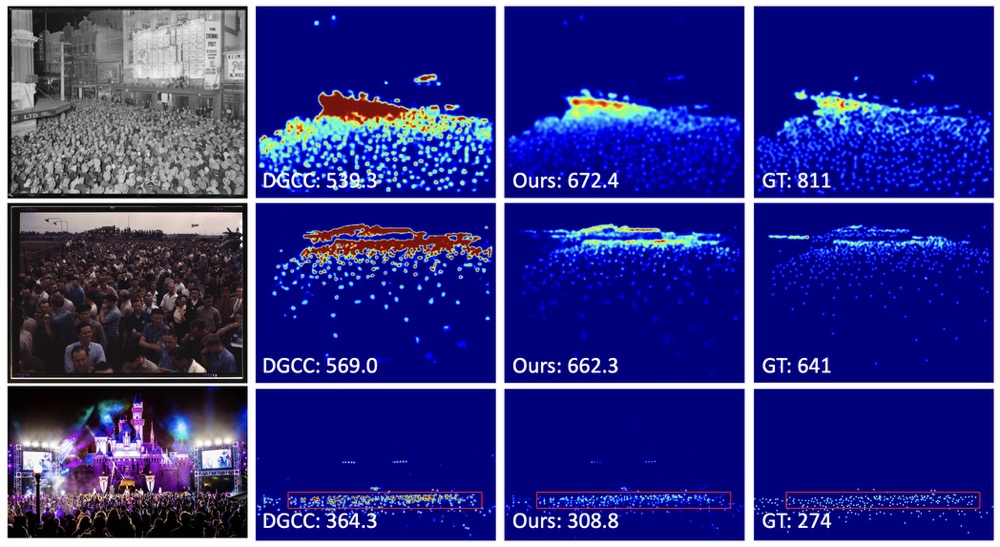

Visualizations

Citation

Please kindly cite our paper if you find this project helpful.

@inproceedings{ding2024domain,
  title={Domain-Agnostic Crowd Counting via Uncertainty-Guided Style Diversity Augmentation},
  author={Ding, Guanchen and Liu, Lingbo and Chen, Zhenzhong and Chen, Chang Wen},
  booktitle={ACM Multimedia 2024},
  year={2024}
}